Crowdsourcing Historical Data

Today we’re going to consider an innovation in the digital humanities, and look at the practice of crowdsourcing historical data!

Academic research is increasingly crowdsourcing common coding tasks, usually combines judgements from multiple non-expert coders through the use of online platforms that distribute tasks to a large volume of (typically compensated) coders. For anyone interested in the use of this for research, there are some great articles on this method from here, here, and here.

Crowdsourcing can be used for a number of data-processing tasks, and increasingly on social media I’m seeing historical data being transcribed by teams of thousands of online volunteers. I noted an example of this practice on Broadstreet before, when the New York Public Library was leveraging crowdsourcing to transcribe historical menus. I just moved to the UK, and there’s even an annual volunteer event called “Transcription Tuesday” that crowdsources new historical data each year!

While this unique form of data entry probably won’t be suitable for all academic projects, there are still so many great examples and resources to learn from. The Smithsonian Digital Volunteers: Transcription Center, featured in the picture below and at https://transcription.si.edu, is one such example.

There is also a community there, where “volunpeers” can share their transcription experiences and give advice. Here there are some nice best practices — for example, one of the current projects involves data from the Bureau of Refugees, Freedmen, and Abandoned Lands in Virginia in the 19th century, and so volunteers are provided with guides on “Common Historic Conventions, Spellings, and Abbreviations” and “Freedmen's Bureau Staff Rosters, Style Sheets, and Abbreviations.”

The website also links to resources on how to decipher handwriting, including this gem called wordmine.info — a program that is a word search engine, that can help you guess the most likely words when you are missing characters. (Apparently it’s also good if you are stuck on crosswords!).

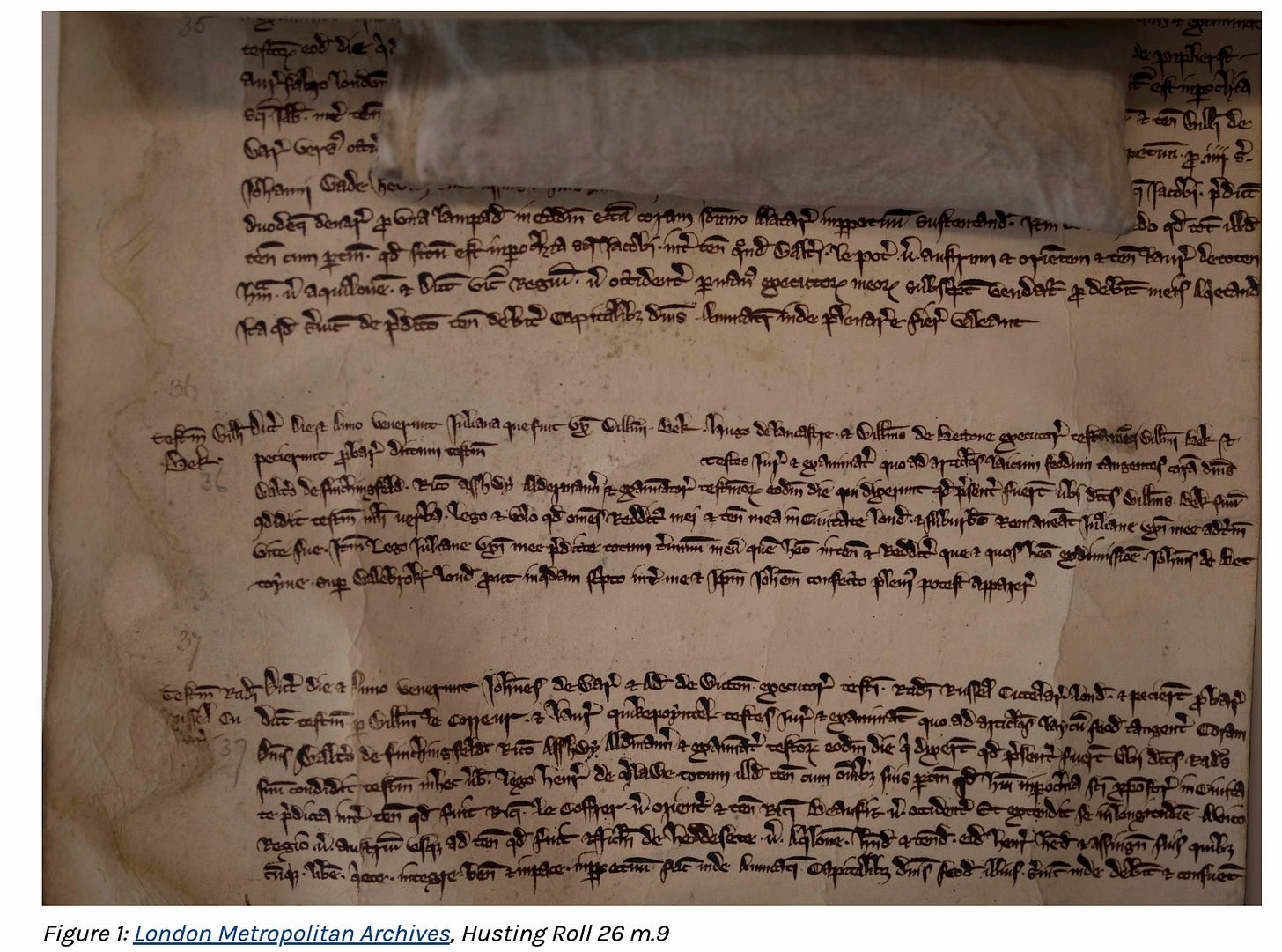

A second example of crowdsourcing historical data entry can be found in The Get to Know Medieval Londoners Project, that was run through the Institute of Historical Research. The project has now completed, but it involved their archive of thousands of property deeds from medieval London. The data is being uploaded to the Medieval Londoners Database, part of a project from Fordham University's Center for Medieval Studies. This database is an open-access, searchable collection of individuals who lived in London between 1100 and 1520.

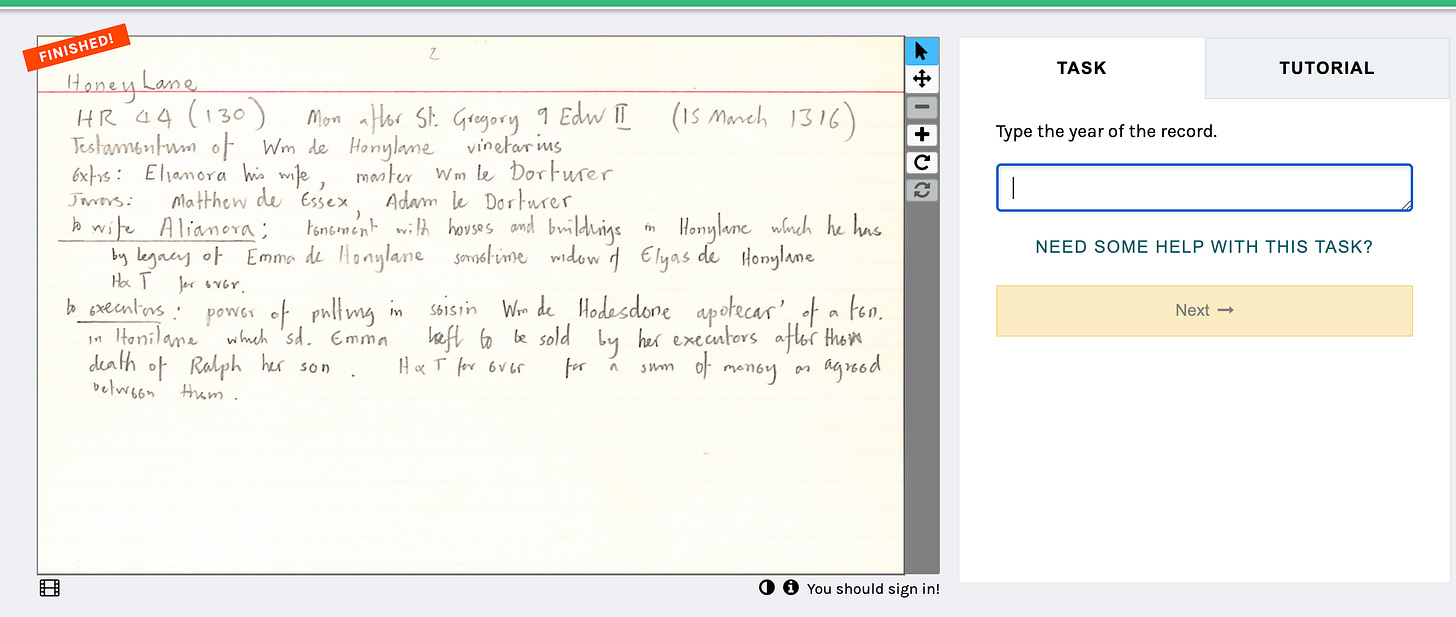

It’s useful to look at the website to see how it was set up. This project used a crowdsourcing online platform called Zooniverse, which presented the material needing transcription or coding alongside easy to fill out prompts that collect and store the data (along with guides, tutorials, etc). Zooniverse can host any project, and there are so many examples on the website — this is a great digital infrastructure resource, if you want to involve the public in data entry. (Another transcription platform example is Europeana Transcribe, for those interested).

It’s worth noting that volunteers were not transcribing medieval wills (which is a pretty specialized task, involving medieval handwriting) but were helping by processing handwritten records about the collection (see below). But the platform is very user friendly, with plenty of instructions and help in the case of questions.

Generally I think these are really neat initiatives, because they get people involved in history and leverage the skills of the public (my mother is a retired professor and genealogist; she would be great at deciphering handwriting, for example). This probably won’t be a common strategy for academics or researchers. The easier the task the better, with crowdsourcing (you don’t want volunteers to give up), but now easy data entry tasks can often be handled with other tools. Advances in AI and machine learning are making OCR at scale much easier and much quicker, while assembling an enthusiastic internet army of volunteers requires more infrastructure. It’s true historical data collection can still stump the robots — for those of us who are trying to transcribe centuries old handwritten documents with local language idiosyncrasies (hello, Polish witchcraft trials!), I could see how crowdsourcing native speakers might be useful? But at that point I think we turn to specifically trained transcribers.

But in any case, keep crowdsourcing in the back of your mind, and if you ever use it for historical data collection, come back to Broadstreet to tell us all about it!