Friends Don’t Let Friends Use Google NGrams

“Can societies collectively become more or less depressed over time?” A recent paper published in PNAS asks (and answers) that big, bold question.

The authors go looking for “markets of cognitive distortions” in the Google Books corpus (English, Spanish, and German languages), a corpus that covers more than a century of the written word. The distortions are 2-grams like “everyone thinks” or “still feels” and 3-grams like “I am a”. They find that the distortions have grown dramatically since the 1980s; apparently, we are more depressed (as a society) today than we were during the Great Depression or either World War.

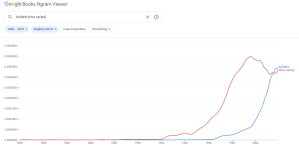

These are big claims and the Sagan standard should apply: extraordinary claims require extraordinary evidence. Figure 3 in the paper (above) plots z-scores in the prevalence of cognitive distortion n-grams from 1855 to 2020 and has a remarkable hockey stick pattern in English, Spanish, and German: after 1980 (or more clearly after 2000) rates of cognitive distortion n-grams are well above long term trends. There is even a “null model” which tracks the prevalence of randomly chosen n-grams (those that should be unrelated to societal cognitive distortions) that looks nice and flat. Compelling?

I think we learn something important from this study, but unfortunately for the authors, it isn’t whether or not societies can or did become more or less depressed over time. Benjamin Schmidt put it best on Twitter last month:

This PNAS article claiming to find a world-wide outbreak of depression since 2000 is shockingly bad. The authors don’t bother to understand the 2019 Google Books “corpus” a tiny bit; everything they find is explained by Google ingesting different books.https://t.co/nQjY3P0nAC

— Benjamin Schmidt (@benmschmidt) July 26, 2021

Everyone should go read his tweetstorm critique (my favorite kind of tweetstorm). But the basic idea is that you have to know your data. In this case, the Google Books corpus is neither a random sample of text nor is it a complete collection of all text. It is an idiosyncratic mix of sources that varies over time. And that mix in Google Books changed dramatically around 2000. Older books entered the corpus when Google scanned them in a library; newer books entered because publishers had agreements with Google.

For example, lots and lots of publishers are selling reprints of public domain books. Schmidt noted that the use of thou/thy/thee all spiked around 2016 but please don’t go writing a paper about how the Trump era made people nostalgic for archaic pronouns because of… whatever. I’m feeling guilty for even putting this half-idea into the universe.

So what is going on in the PNAS paper we started with? Well, one change Schmidt noted was a huge rise in fiction. And what sorts of phrases might be a lot more common in fiction than in legal treatises? Phrases about personal experience that might easily be coded as “cognitive distortions”. (According to Schmidt the words “toes” and “hair” also show up more in fiction which I suppose I’ll take his word for.)

It is fun to dunk on questionable research on Twitter and it is even more fun when the research isn’t by an economist (at least I hope none of the authors on the PNAS paper are economists). But there are a few lessons we can have along with our lolz. The first is quite general (I even try to indoctrinate my undergrads with it). Never trust a time series. There is just way, way, way too much else that could be changing at the same time to have much confidence in a single time series pattern. Time series figures can be suggestive or generative but if they are all the evidence you have, you probably need more evidence.

Second, I may be preaching a bit to the choir here (you are, after all, reading a blog about historical political economy), but there is no substitute for a deep understanding of your data. This is true whether you’re analyzing big data with machine learning tools or small data with means and medians or anything else. This goes a bit beyond garbage in, garbage out, as even good data can be misread or misunderstood by researchers who don’t take care with it: where did the data come from, who collected it and why, what do the questions or variables mean, and so on. Schmidt called this “dataset due diligence” and I like that term a lot.

Third, I’m starting to wonder what (if anything) we can learn from tracking the use of a word or words over time? If there is, I don’t think it will come from Google Trends. Along with Peter Organisciak and J. Stephen Downie, Schmidt built a tool called HathiTrust+Bookworm that is, in my view, a Google Trends for serious scholarship. The HT+BW platform enables researchers to plot phrase usage over time in the HathiTrust corpus but also enables filtering and grouping by textual metadata (Library of Congress classes, publication location, and more). OCR and metadata can be wrong but much sharper analysis is possible with HT+BW than with Google Trends. HT+BW also comes with a detailed introductory paper from Schmidt et al that even includes a section on “Selection biases and omissions”.

Finally, if you really do want to use textual data to study historical questions—and you should because there was a lot of text produced historically, even if only a very nonrandom sample of it was archived and saved—think about spatial variation to go along with temporal variation. HT+BW enables cuts by publication country or state but we might want to look beyond books. In his work with historical newspapers, Max Winkler does just that.

The local and geographical nature of newspapers is a key advantage relative to published books. Even more than today, newspapers historically were local affairs, both covering local stories and catering to local readerships. As Winkler argues in a recent paper, “language in local newspapers reflects the local culture.” If this is the case (and Winkler’s evidence is compelling) then the language used in local newspapers can enable analysis of textual data to move well beyond simple time series plots.

In a recent working paper with Sebastian Ottinger, Political Threat and Racial Propaganda, Winkler gives us a great example of the power of this approach. The biracial Populist Party threatened entrenched planter elites in the Democratic Party in the 1890s. Ottinger and Winkler trace the publication of “hate stories” in southern newspapers after the 1892 election. Racial propaganda grew but only in newspapers affiliated with the Democratic party. Moreover, the propaganda “worked” and drove up Democratic vote share in later elections, even long after the Populist Party faded away.